library(bcn)

library(randomForest)

#> randomForest 4.7-1.1

#> Type rfNews() to see new features/changes/bug fixes.

library(pROC)

#> Type 'citation("pROC")' for a citation.

#>

#> Attaching package: 'pROC'

#> The following objects are masked from 'package:stats':

#>

#> cov, smooth, varA few years ago in 2018, I discussed Boosted Configuration (neural) Networks (BCN, for multivariate time series forecasting) in this document. Unlike Stochastic Configuration Networks from which they are inspired, BCNs aren’t randomized. Rather, they are closer to Gradient Boosting Machines and Matching Pursuit algorithms; with base learners being single-layered feedforward neural networks – that are actually optimized at each iteration of the algorithm.

The mathematician that you are has certainly been asking himself

questions about the convexity of the problem at line 4, algorithm 1 (in

the

document). As of July 2022, there are unfortunately no answers to

that question. BCNs works well empirically, as we’ll

see, and finding the maximum at line 4 of the algorithm is achieved, by

default, with R’s stats::nlminb. Other derivative-free

optimizers are available in R package

bcn.

As it will be shown in this document, BCNs can be used for

classification. For this purpose, and as implemented in R package

bcn, the response (variable to be explained) containing

the classes is one-hot encoded as a matrix of probabilities equal to 0

or 1. Then, the classification technique dealing with a one-hot encoded

response matrix is similar to the one presented in

this post.

3 toy datasets are used for this basic demo of R package

bcn: Iris, Wine, Penguins. For each dataset, hyperparameter

tuning has already been done. Repeated 5-fold cross-validation was

carried out on 80% of the data, for each dataset, and the accuracy

reported in the table below is calculated on the remaining 20% of the

data. BCN results are compared to Random

Forest’s (with default parameters), in order to verify that BCN

results are not absurd – it’s not a competition between Random Forest

and BCN here.

The future for R package

bcn (in no particular order)?

- Implement BCN for regression (a continuous response)

- Improve the speed of execution for high dimensional problems

- Implement a Python version

| Dataset | BCN test set Accuracy | Random Forest test set accuracy |

|---|---|---|

| iris | 100% | 93.33% |

| Wine | 97.22% | 94.44% |

| Penguins | 100% | 100% |

Content

0 - Installing and loading packages

Installing bcn From Github:

devtools::install_github("Techtonique/bcn")

# Browse the bcn manual pages

help(package = 'bcn')Installing bcn from R universe:

# Enable repository from techtonique

options(repos = c(

techtonique = 'https://techtonique.r-universe.dev',

CRAN = 'https://cloud.r-project.org'))

# Download and install bcn in R

install.packages('bcn')

# Browse the bcn manual pages

help(package = 'bcn')Loading packages:

library(bcn)

library(randomForest)

library(pROC)1 - iris dataset

data("iris")

head(iris)

#> Sepal.Length Sepal.Width Petal.Length Petal.Width Species

#> 1 5.1 3.5 1.4 0.2 setosa

#> 2 4.9 3.0 1.4 0.2 setosa

#> 3 4.7 3.2 1.3 0.2 setosa

#> 4 4.6 3.1 1.5 0.2 setosa

#> 5 5.0 3.6 1.4 0.2 setosa

#> 6 5.4 3.9 1.7 0.4 setosa

dim(iris)

#> [1] 150 5

set.seed(1234)

train_idx <- sample(nrow(iris), 0.8 * nrow(iris))

X_train <- as.matrix(iris[train_idx, -ncol(iris)])

X_test <- as.matrix(iris[-train_idx, -ncol(iris)])

y_train <- iris$Species[train_idx]

y_test <- iris$Species[-train_idx]

ptm <- proc.time()

fit_obj <- bcn::bcn(x = X_train, y = y_train, B = 10L, nu = 0.335855,

lam = 10**0.7837525, r = 1 - 10**(-5.470031), tol = 10**-7,

activation = "tanh", type_optim = "nlminb", show_progress = FALSE)

cat("Elapsed: ", (proc.time() - ptm)[3])

#> Elapsed: 0.43

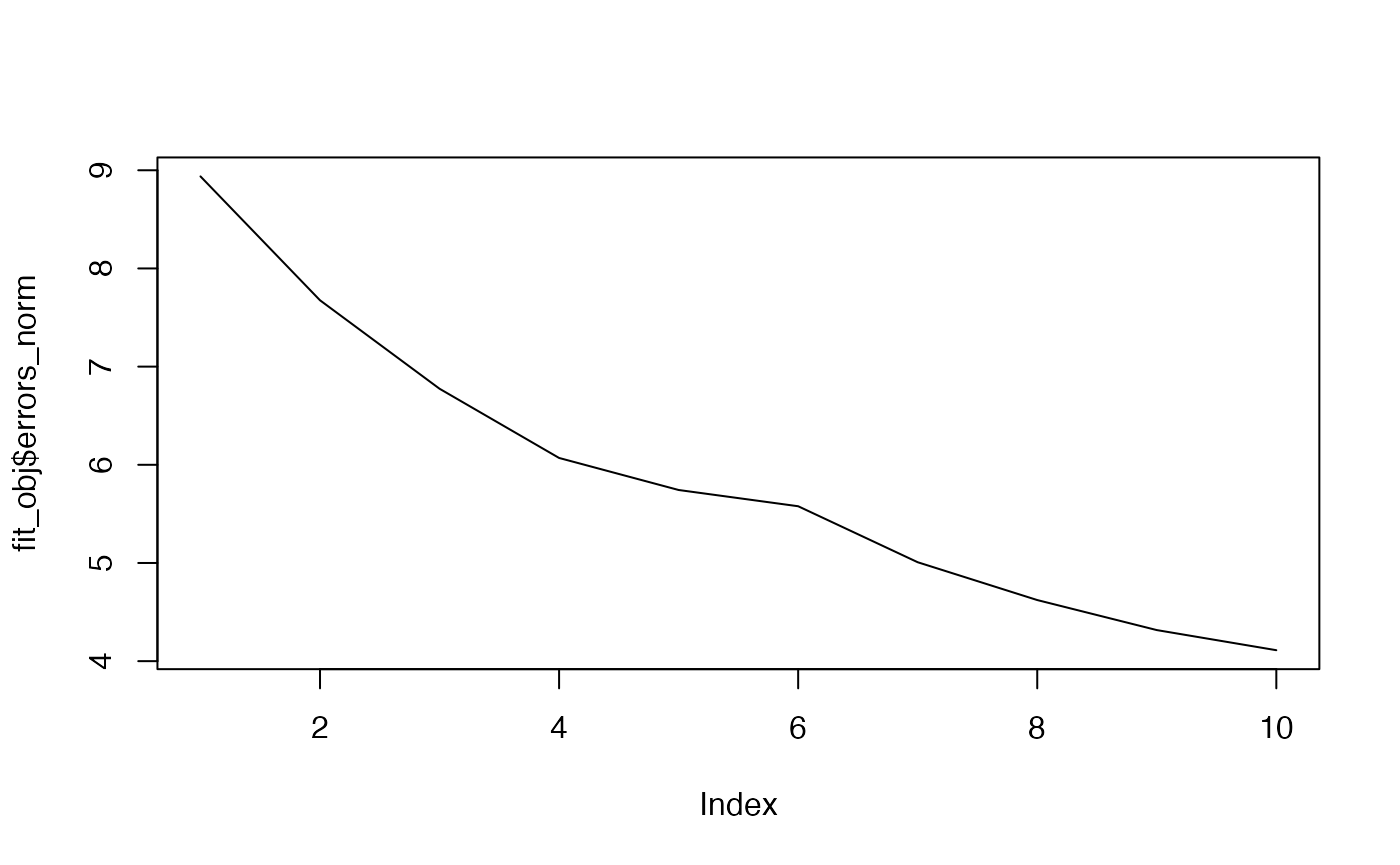

plot(fit_obj$errors_norm, type='l')

preds <- predict(fit_obj, newx = X_test)

mean(preds == y_test)

#> [1] 1

table(y_test, preds)

#> preds

#> y_test setosa versicolor virginica

#> setosa 13 0 0

#> versicolor 0 8 0

#> virginica 0 0 9

rf <- randomForest::randomForest(x = X_train, y = y_train)

mean(predict(rf, newdata=as.matrix(X_test)) == y_test)

#> [1] 0.9333333

print(head(predict(fit_obj, newx = X_test, type='probs')))

#> setosa versicolor virginica

#> [1,] 0.4024654 0.3174903 0.2800443

#> [2,] 0.4002180 0.3188798 0.2809022

#> [3,] 0.3993414 0.3209437 0.2797149

#> [4,] 0.4072561 0.3125790 0.2801649

#> [5,] 0.4023243 0.3167276 0.2809481

#> [6,] 0.4043465 0.3152716 0.2803818

print(head(predict(rf, newdata=as.matrix(X_test), type='prob')))

#> setosa versicolor virginica

#> 1 1.000 0.000 0

#> 7 1.000 0.000 0

#> 12 1.000 0.000 0

#> 15 0.946 0.054 0

#> 18 1.000 0.000 0

#> 23 1.000 0.000 02- wine dataset

data(wine)

head(wine)

#> alcohol malic_acid ash alcalinity_of_ash magnesium total_phenols flavanoids

#> 1 14.23 1.71 2.43 15.6 127 2.80 3.06

#> 2 13.20 1.78 2.14 11.2 100 2.65 2.76

#> 3 13.16 2.36 2.67 18.6 101 2.80 3.24

#> 4 14.37 1.95 2.50 16.8 113 3.85 3.49

#> 5 13.24 2.59 2.87 21.0 118 2.80 2.69

#> 6 14.20 1.76 2.45 15.2 112 3.27 3.39

#> nonflavanoid_phenols proanthocyanins color_intensity hue

#> 1 0.28 2.29 5.64 1.04

#> 2 0.26 1.28 4.38 1.05

#> 3 0.30 2.81 5.68 1.03

#> 4 0.24 2.18 7.80 0.86

#> 5 0.39 1.82 4.32 1.04

#> 6 0.34 1.97 6.75 1.05

#> od280.od315_of_diluted_wines proline target

#> 1 3.92 1065 1

#> 2 3.40 1050 1

#> 3 3.17 1185 1

#> 4 3.45 1480 1

#> 5 2.93 735 1

#> 6 2.85 1450 1

dim(wine)

#> [1] 178 14

set.seed(1234)

train_idx <- sample(nrow(wine), 0.8 * nrow(wine))

X_train <- as.matrix(wine[train_idx, -ncol(wine)])

X_test <- as.matrix(wine[-train_idx, -ncol(wine)])

y_train <- as.factor(wine$target[train_idx])

y_test <- as.factor(wine$target[-train_idx])

ptm <- proc.time()

fit_obj <- bcn::bcn(x = X_train, y = y_train, B = 6L, nu = 0.8715725,

lam = 10**0.2143678, r = 1 - 10**(-6.1072786),

tol = 10**-4.9605713, show_progress = FALSE)

cat("Elapsed: ", (proc.time() - ptm)[3])

#> Elapsed: 0.592

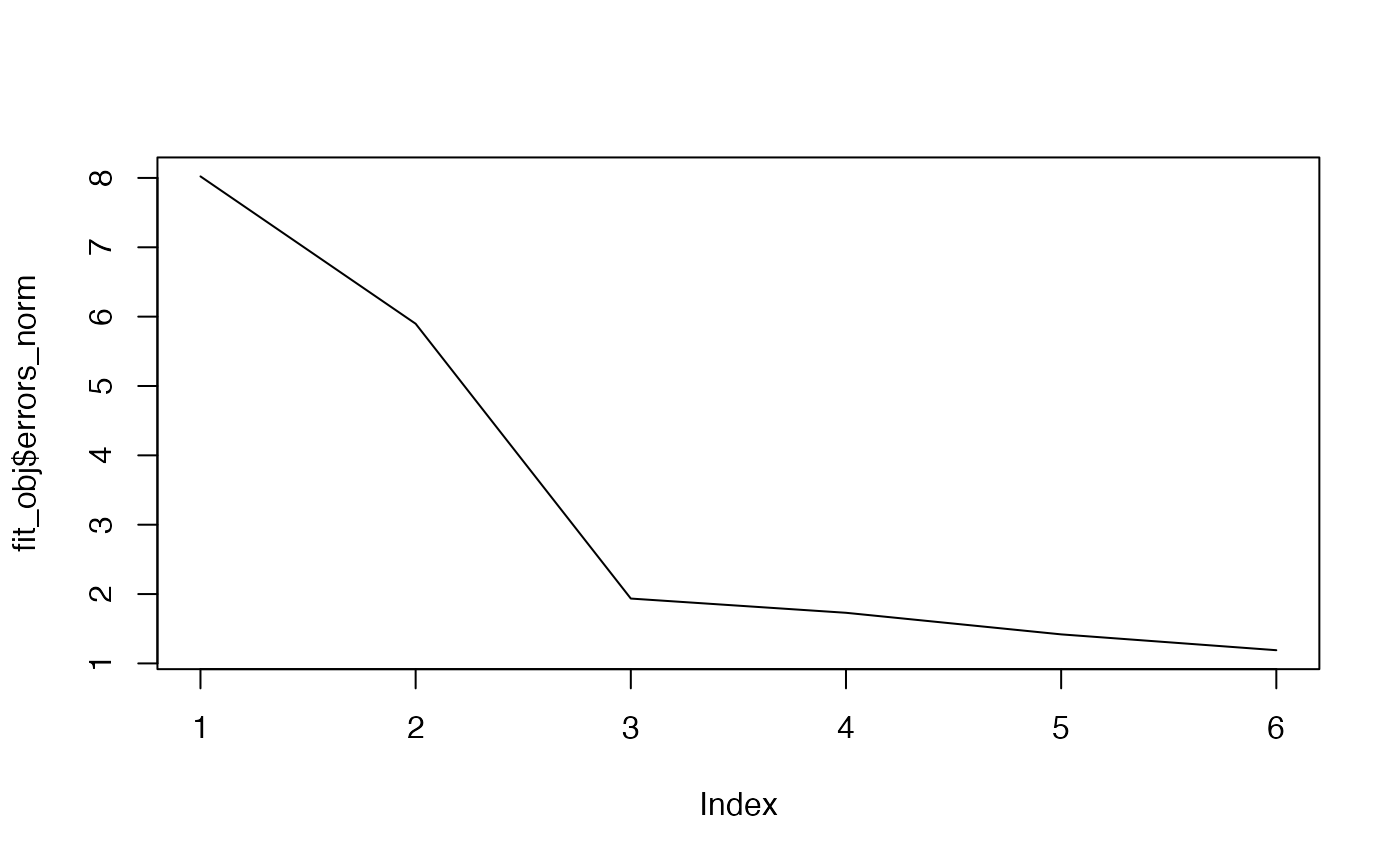

plot(fit_obj$errors_norm, type='l')

preds <- predict(fit_obj, newx = X_test)

mean(preds == y_test)

#> [1] 0.9722222

table(y_test, preds)

#> preds

#> y_test 1 2 3

#> 1 14 0 0

#> 2 0 15 1

#> 3 0 0 6

rf <- randomForest::randomForest(x = X_train, y = y_train)

mean(predict(rf, newdata=as.matrix(X_test)) == y_test)

#> [1] 0.9444444

print(head(predict(fit_obj, newx = X_test, type='probs')))

#> 1 2 3

#> [1,] 0.4243481 0.2896224 0.2860294

#> [2,] 0.4007695 0.2951041 0.3041265

#> [3,] 0.4229677 0.2896510 0.2873814

#> [4,] 0.4234005 0.2893842 0.2872153

#> [5,] 0.4200538 0.2880944 0.2918518

#> [6,] 0.4185796 0.2888308 0.2925896

print(head(predict(rf, newdata=as.matrix(X_test), type='prob')))

#> 1 2 3

#> 1 0.998 0.002 0.000

#> 5 0.524 0.400 0.076

#> 12 0.768 0.202 0.030

#> 13 0.918 0.070 0.012

#> 18 0.928 0.058 0.014

#> 31 0.902 0.092 0.0063 - Penguins dataset

data("penguins")

penguins_ <- as.data.frame(penguins)

replacement <- median(penguins$bill_length_mm, na.rm = TRUE)

penguins_$bill_length_mm[is.na(penguins$bill_length_mm)] <- replacement

replacement <- median(penguins$bill_depth_mm, na.rm = TRUE)

penguins_$bill_depth_mm[is.na(penguins$bill_depth_mm)] <- replacement

replacement <- median(penguins$flipper_length_mm, na.rm = TRUE)

penguins_$flipper_length_mm[is.na(penguins$flipper_length_mm)] <- replacement

replacement <- median(penguins$body_mass_g, na.rm = TRUE)

penguins_$body_mass_g[is.na(penguins$body_mass_g)] <- replacement

# replacing NA's by the most frequent occurence

penguins_$sex[is.na(penguins$sex)] <- "male" # most frequent

print(summary(penguins_))

#> species island bill_length_mm bill_depth_mm

#> Adelie :152 Biscoe :168 Min. :32.10 Min. :13.10

#> Chinstrap: 68 Dream :124 1st Qu.:39.27 1st Qu.:15.60

#> Gentoo :124 Torgersen: 52 Median :44.45 Median :17.30

#> Mean :43.92 Mean :17.15

#> 3rd Qu.:48.50 3rd Qu.:18.70

#> Max. :59.60 Max. :21.50

#> flipper_length_mm body_mass_g sex year

#> Min. :172.0 Min. :2700 female:165 Min. :2007

#> 1st Qu.:190.0 1st Qu.:3550 male :179 1st Qu.:2007

#> Median :197.0 Median :4050 Median :2008

#> Mean :200.9 Mean :4201 Mean :2008

#> 3rd Qu.:213.0 3rd Qu.:4750 3rd Qu.:2009

#> Max. :231.0 Max. :6300 Max. :2009

print(sum(is.na(penguins_)))

#> [1] 0

# one-hot encoding for covariates

penguins_mat <- model.matrix(species ~., data=penguins_)[,-1]

penguins_mat <- cbind(penguins_$species, penguins_mat)

penguins_mat <- as.data.frame(penguins_mat)

colnames(penguins_mat)[1] <- "species"

print(head(penguins_mat))

#> species islandDream islandTorgersen bill_length_mm bill_depth_mm

#> 1 1 0 1 39.10 18.7

#> 2 1 0 1 39.50 17.4

#> 3 1 0 1 40.30 18.0

#> 4 1 0 1 44.45 17.3

#> 5 1 0 1 36.70 19.3

#> 6 1 0 1 39.30 20.6

#> flipper_length_mm body_mass_g sexmale year

#> 1 181 3750 1 2007

#> 2 186 3800 0 2007

#> 3 195 3250 0 2007

#> 4 197 4050 1 2007

#> 5 193 3450 0 2007

#> 6 190 3650 1 2007

print(tail(penguins_mat))

#> species islandDream islandTorgersen bill_length_mm bill_depth_mm

#> 339 2 1 0 45.7 17.0

#> 340 2 1 0 55.8 19.8

#> 341 2 1 0 43.5 18.1

#> 342 2 1 0 49.6 18.2

#> 343 2 1 0 50.8 19.0

#> 344 2 1 0 50.2 18.7

#> flipper_length_mm body_mass_g sexmale year

#> 339 195 3650 0 2009

#> 340 207 4000 1 2009

#> 341 202 3400 0 2009

#> 342 193 3775 1 2009

#> 343 210 4100 1 2009

#> 344 198 3775 0 2009

y <- as.integer(penguins_mat$species)

X <- as.matrix(penguins_mat[,2:ncol(penguins_mat)])

n <- nrow(X)

p <- ncol(X)

set.seed(1234)

index_train <- sample(1:n, size=floor(0.8*n))

X_train <- X[index_train, ]

y_train <- factor(y[index_train])

X_test <- X[-index_train, ]

y_test <- factor(y[-index_train])

ptm <- proc.time()

fit_obj <- bcn::bcn(x = X_train, y = y_train, B = 23, nu = 0.470043,

lam = 10**-0.05766029, r = 1 - 10**(-7.905866), tol = 10**-7,

show_progress = FALSE)

cat("Elapsed: ", (proc.time() - ptm)[3])

#> Elapsed: 0.522

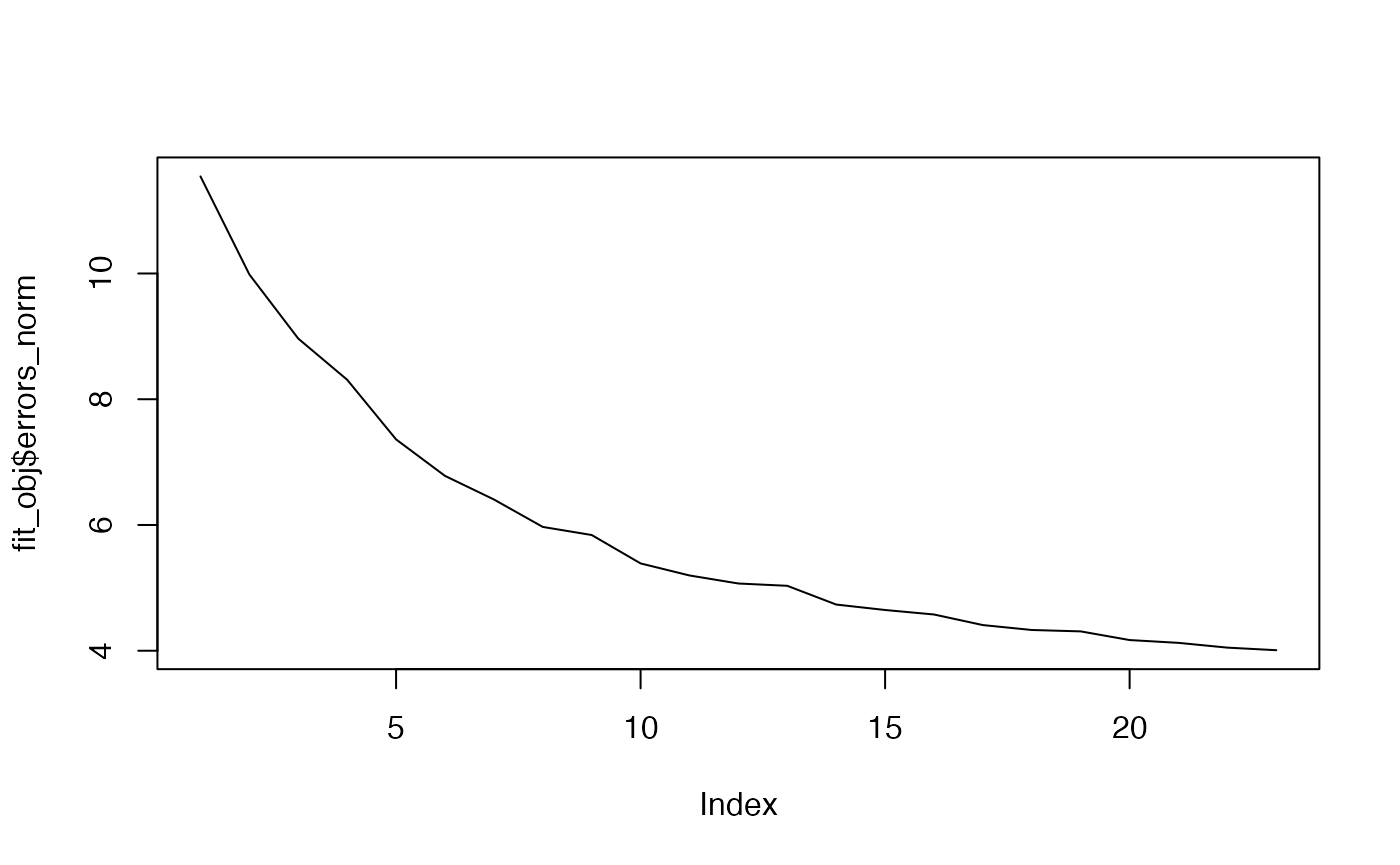

plot(fit_obj$errors_norm, type='l')

preds <- predict(fit_obj, newx = X_test)

mean(preds == y_test)

#> [1] 1

table(y_test, preds)

#> preds

#> y_test 1 2 3

#> 1 24 0 0

#> 2 0 13 0

#> 3 0 0 32

rf <- randomForest::randomForest(x = X_train, y = y_train)

mean(predict(rf, newdata=as.matrix(X_test)) == y_test)

#> [1] 1

print(head(predict(fit_obj, newx = X_test, type='probs')))

#> 1 2 3

#> [1,] 0.4354771 0.2811665 0.2833565

#> [2,] 0.3977326 0.3181369 0.2841305

#> [3,] 0.4327560 0.2715548 0.2956892

#> [4,] 0.4290288 0.2839554 0.2870158

#> [5,] 0.4298022 0.2811012 0.2890967

#> [6,] 0.4202598 0.2917779 0.2879623

print(head(predict(rf, newdata=as.matrix(X_test), type='prob')))

#> 1 2 3

#> 1 0.998 0.000 0.002

#> 3 0.992 0.008 0.000

#> 8 0.966 0.000 0.034

#> 11 0.990 0.004 0.006

#> 16 1.000 0.000 0.000

#> 18 0.946 0.018 0.036